Johanna Reich, Hynitha | Jeto Sekän

JOHANNA REICH

Hynitha | Jeto Sekän

2025

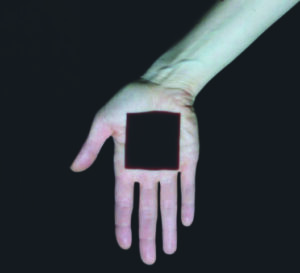

Indian ink on canvas with LED-Display, generated poem

50 x 60 cm

Unique piece

When we look at a painting, we perceive colours and shapes that have a certain effect on us. We refer to this as "visual language." But what if pictures could speak or form words? What could the language of images look or sound like? What if this language could be translated into our human language?

These considerations are based on a very personal approach: I am neurodivergent; I am a synaesthete. "Neurodivergence" refers to brains functioning differently from what is considered typical, and encompasses conditions such as autism, ADHD and dyslexia, among others. For many years, I saw this more as a problem in everyday life, as I was constantly suppressing sensory impressions, such as colours when reading or sounds when seeing, so that my brain wouldn't get too tired. The possibility of working with language models gave me the idea of opening up this very personal world. What could the translation of colour into written or spoken language look like? Would it be possible to develop my own language?

The dream of a language designed on the drawing board is an old one: there have been repeated attempts to invent a universal language, and planned languages such as Esperanto, Solresol and Interlingua have been created. The most common newly developed languages today are programming languages that issue commands and instructions. Languages shape our thoughts and actions; they change us and our world.

In collaboration with an A.I., I have begun to develop a written and spoken language of images. Since neuroscience has been working more and more on the brain-computer interface, it has become increasingly clear that human brains are not standardised, but unique, with countless small differences. My current work is based on one facet of these differences, my synaesthesia. For me, each letter is directly linked to a specific colour perception as well as a sound. The "A" has a certain shade of green, the "B" a shade of blue, and these colours also have a sound. This individual neuronal peculiarity in interpreting the world becomes an onomatopoeic language of images.

I used this letter and colour information to populate a language model. The language model then generated its own onomatopoeic language. If a coloured image is now "read" by the A.I., colours and shapes can be translated into words. The language was and continues to be created in many small steps in collaboration between computer and human. It is still very limited in its vocabulary and is generated in verse form.

The current series is called "HYNITHA (Whisper)." The words of a short onomatopoeic poem flash behind ink drawings on canvas. The short poem can be translated into our human language.
